In my previous article, I explained how to deploy your own LLM using Hugging Face Inference Endpoints. You can find that article here.

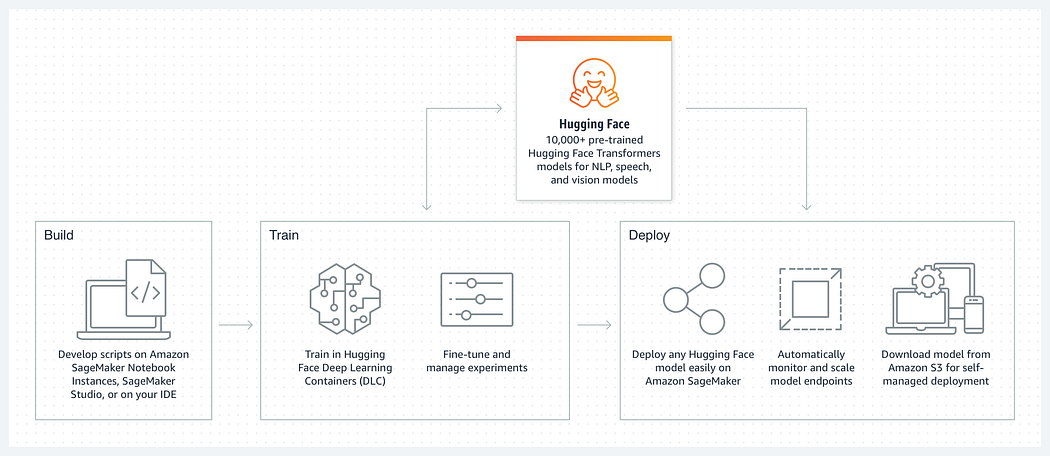

In this article, I will describe LLM learning approaches, introduce Hugging Face Deep Learning Containers (DLCs), and guide you through deploying models using these resources on Amazon SageMaker.

There are multiple ways an LLM can learn:

- Classic supervised learning: This approach trains the model on a specific task using a single dataset. The downside is that any change in the dataset or task requires creating and training a new model. This is challenging when there isn’t enough labeled data for task-specific models.

- Transfer learning: This approach dominates the LLM and NLP domains. Models are first pre-trained on large quantities of data to build general knowledge. This knowledge is then refined (fine-tuned) on a labeled dataset for a downstream task.
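The difference between the two approaches can be illustrated with a deliberately tiny example. The sketch below is not how LLMs are trained: it fits a one-parameter linear model with plain gradient descent, and every name and number in it (`train`, `mse`, the toy datasets, the learning rate) is an assumption chosen for illustration. It shows only the core idea of transfer learning, which is starting the downstream task from pre-trained weights instead of from scratch.

```python
# Toy illustration of transfer learning with a one-parameter model y = w * x.
# All data and hyperparameters here are made up for illustration.

def mse(w, data):
    """Mean squared error of the model y = w * x on (x, y) pairs."""
    return sum((w * x - y) ** 2 for x, y in data) / len(data)

def train(w, data, steps, lr=0.01):
    """Run `steps` iterations of gradient descent on the MSE, starting from w."""
    for _ in range(steps):
        grad = sum(2 * x * (w * x - y) for x, y in data) / len(data)
        w -= lr * grad
    return w

# "Pre-training": many training steps on a related task (true w = 2.0).
pretrain_data = [(x, 2.0 * x) for x in (1.0, 2.0, 3.0)]
w_pretrained = train(0.0, pretrain_data, steps=200)

# Downstream task: slightly different target (true w = 2.2), small training budget.
downstream_data = [(x, 2.2 * x) for x in (1.0, 2.0, 3.0)]
w_finetuned = train(w_pretrained, downstream_data, steps=5)  # start from pre-trained weight
w_scratch = train(0.0, downstream_data, steps=5)             # start from zero

finetuned_loss = mse(w_finetuned, downstream_data)
scratch_loss = mse(w_scratch, downstream_data)
print(f"fine-tuned loss: {finetuned_loss:.4f}, from-scratch loss: {scratch_loss:.4f}")
```

With the same five-step budget, the run that starts from the pre-trained weight ends with a lower loss than the run that starts from zero, because it begins much closer to the downstream solution.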

The field of NLP advanced significantly in 2017 with the introduction of the transformer architecture in the paper "Attention Is All You Need" by Vaswani and colleagues. This led to transformer models such as BERT, RoBERTa, GPT-2, and DistilBERT, which set a high bar for NLP tasks like text classification, question answering, summarization, and text generation.

Introduction to Hugging Face Deep Learning Containers (DLCs):

DLCs are Docker images pre-installed with deep learning frameworks and libraries like Transformers, Tokenizers, and Datasets. They offer an immediate start to training models, bypassing the complex task of building and optimizing your training environments from scratch.

The Hugging Face DLCs simplify training Hugging Face transformer models on SageMaker, deploying them, and managing the inference infrastructure. The open-source Hugging Face Inference Toolkit for SageMaker serves Hugging Face Transformers models; it uses the SageMaker Inference Toolkit to start the model server that handles inference requests.

Deploying models with Hugging Face DLCs on SageMaker:

Teaming up with AWS, Hugging Face for Amazon SageMaker offers a streamlined solution for deploying and fine-tuning pre-trained models. This collaboration significantly minimizes the time and skill needed to set up and apply these NLP models.

Hugging Face and AWS have co-developed the AWS Deep Learning Containers (DLCs). They provide machine learning (ML) developers and data scientists with a fully managed environment to build, train, and deploy state-of-the-art NLP models on Amazon SageMaker.

Using AWS DLCs, you can deploy:

- Fine-tuned models developed according to your use cases

- Pre-trained models available on the Hugging Face Hub

Additionally, AWS DLCs offer an inference module that you can customize with an inference script, which overrides the default methods of the Hugging Face Handler Service. You can override model_fn to control how the model is loaded, and then either implement a single transform_fn method that handles the whole request, or override the default preprocessing, prediction, and post-processing steps individually by implementing input_fn, predict_fn, or output_fn.

One advantage of using the Hugging Face SDK is that it manages inference containers for you, eliminating the need to manipulate Docker files or Docker registries.

Deploying Your Model:

There are three methods to deploy endpoints, all of which we will explore:

- Deploying a SageMaker-Trained Hugging Face Model: To deploy a model trained on Amazon SageMaker from Amazon Simple Storage Service (Amazon S3), ensure that all necessary files, including the Tokenizer, are saved in a model.tar.gz file. Use model_data to locate your saved model file in Amazon S3. Sample code for this process is available on GitHub.

- Deploying a Model Directly From the Hugging Face Hub: To deploy a model directly from the Hub to SageMaker, you must initialize two environment variables:

– HF_MODEL_ID: Identifies the model. The model with this ID is automatically loaded from the Hugging Face Hub when the SageMaker endpoint is created.

– HF_TASK: Identifies the task for the used Transformers pipeline.

The step-by-step guide below lists the possible HF_TASK values and includes a code snippet for this method.

- Deploying a SageMaker Endpoint Using a Custom Inference Script: The Hugging Face Inference Toolkit lets you override the default methods of HuggingFaceHandlerService by specifying a custom inference.py with model_fn and, optionally, input_fn, predict_fn, output_fn, or transform_fn. You’ll need to create a code/ directory with an inference.py file in it.

So far, this article has introduced LLM learning approaches and Hugging Face DLCs, and outlined the ways to deploy models on Amazon SageMaker. The guide below walks through each deployment method.

Step-by-Step Guide to Deploying Models Using Amazon SageMaker:

- Create a SageMaker endpoint with a trained model

To deploy a SageMaker-trained Hugging Face model from Amazon Simple Storage Service (Amazon S3), make sure that all required files, including the tokenizer, are saved in a model.tar.gz file, and use model_data to point to your saved model file in Amazon S3. See the following code:

    from sagemaker.huggingface import HuggingFaceModel
    import sagemaker

    # IAM role with permissions to create an endpoint.
    # get_execution_role() works inside SageMaker notebooks and Studio;
    # elsewhere, set role to your role's ARN string instead.
    role = sagemaker.get_execution_role()

    # create Hugging Face Model Class
    huggingface_model = HuggingFaceModel(
        model_data="s3://bucket/model.tar.gz",  # S3 path to your trained SageMaker model
        role=role,                              # IAM role with permissions to create an endpoint
        transformers_version="4.6",             # transformers version used
        pytorch_version="1.7",                  # pytorch version used
        py_version="py36",                      # Python version of the DLC
    )

The sample code is available on GitHub.
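As a rough sketch of the packaging step, the following uses Python's standard `tarfile` module to bundle a model directory into model.tar.gz with the files at the archive root. The `make_model_archive` helper and the placeholder file names (`pytorch_model.bin`, `config.json`, `tokenizer.json`) are assumptions for illustration; in practice the directory would hold whatever files were saved for your model and tokenizer. Uploading the archive to Amazon S3 is not shown.

```python
import tarfile
import tempfile
from pathlib import Path

def make_model_archive(model_dir: Path, archive_path: Path) -> Path:
    """Pack every file in model_dir into a gzip-compressed tar archive.

    Files are stored relative to model_dir, so they sit at the archive root
    rather than under an extra top-level folder.
    """
    with tarfile.open(archive_path, "w:gz") as tar:
        for path in sorted(model_dir.rglob("*")):
            if path.is_file():
                tar.add(path, arcname=path.relative_to(model_dir).as_posix())
    return archive_path

# Demo with placeholder files standing in for a real saved model and tokenizer.
workdir = Path(tempfile.mkdtemp())
model_dir = workdir / "model"
model_dir.mkdir()
for name in ("pytorch_model.bin", "config.json", "tokenizer.json"):
    (model_dir / name).write_bytes(b"placeholder")

archive = make_model_archive(model_dir, workdir / "model.tar.gz")
with tarfile.open(archive, "r:gz") as tar:
    members = sorted(tar.getnames())
print(members)
```

Listing the archive members before uploading is a cheap way to confirm that the tokenizer files made it in and that nothing is nested under an unexpected folder.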

- Create a SageMaker endpoint with a model from the Hugging Face Hub

You shouldn’t use this feature in production for loading large models; models over 10 GB aren’t supported with this feature.

To deploy a model directly from the Hub to SageMaker, you need to initialize the following environment variables:

- HF_MODEL_ID: Defines the model ID, which is automatically loaded from Hugging Face when creating a SageMaker endpoint. The Hub provides over 10,000 models, all available through this environment variable.

- HF_TASK: Defines the task for the used Transformers pipeline. For a full list of tasks, see Pipelines.

The value of HF_TASK can be one of the following:

"feature-extraction", "text-classification", "token-classification", "table-question-answering", "question-answering", "fill-mask", "summarization", "translation", "text2text-generation", "text-generation", "zero-shot-classification", or "conversational"

The following is a code snippet showing the steps:

    from sagemaker.huggingface import HuggingFaceModel
    import sagemaker

    # IAM role with permissions to create an endpoint.
    # get_execution_role() works inside SageMaker notebooks and Studio;
    # elsewhere, set role to your role's ARN string instead.
    role = sagemaker.get_execution_role()

    # Hub Model configuration. https://huggingface.co/models
    hub = {
        'HF_MODEL_ID': 'distilbert-base-uncased-distilled-squad',  # model_id from hf.co/models
        'HF_TASK': 'question-answering'  # NLP task you want to use for predictions
    }

    # create Hugging Face Model Class
    huggingface_model = HuggingFaceModel(
        env=hub,
        role=role,                   # IAM role with permissions to create an endpoint
        transformers_version="4.6",  # transformers version used
        pytorch_version="1.7",       # pytorch version used
        py_version="py36",           # Python version of the DLC
    )

The sample code is available on GitHub.
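One way to catch a mistyped task name before creating an endpoint is to check the configuration locally first. The helper below is a hypothetical convenience, not part of the SageMaker or Hugging Face SDKs: `build_hub_env` and `SUPPORTED_TASKS` are names introduced here, and the task set simply mirrors the list of HF_TASK values given above.

```python
# Task names copied from the list in this article; the toolkit may support others.
SUPPORTED_TASKS = frozenset({
    "feature-extraction", "text-classification", "token-classification",
    "table-question-answering", "question-answering", "fill-mask",
    "summarization", "translation", "text2text-generation",
    "text-generation", "zero-shot-classification", "conversational",
})

def build_hub_env(model_id: str, task: str) -> dict:
    """Return the env dict for HuggingFaceModel(env=...), validating the task name."""
    if not model_id:
        raise ValueError("HF_MODEL_ID must be a non-empty model ID")
    if task not in SUPPORTED_TASKS:
        raise ValueError(
            f"Unknown HF_TASK {task!r}; expected one of {sorted(SUPPORTED_TASKS)}"
        )
    return {"HF_MODEL_ID": model_id, "HF_TASK": task}

hub = build_hub_env("distilbert-base-uncased-distilled-squad", "question-answering")
print(hub)
```

The returned dictionary can be passed as `env=hub` exactly like the hand-written one.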

Next, you deploy the Hugging Face model to SageMaker and specify the initial instance count and instance type. For more information about the various supported instance types, see Amazon SageMaker Pricing.

deploy returns a Predictor object, which you can use to do inference on the endpoint hosting your Hugging Face model. Each Predictor provides a predict method, which can do inference with NumPy arrays or Python lists. See the following code:

    # deploy model to SageMaker Inference
    predictor = huggingface_model.deploy(
        initial_instance_count=1,
        instance_type="ml.m5.xlarge"
    )

predict returns the result of inference against your model. By default, the predictor serializes requests and deserializes responses as JSON. See the following code:

    # example request, you always need to define "inputs"
    data = {
        "inputs": {
            "question": "Which name is also used to describe the Amazon rainforest in English?",
            "context": "The Amazon rainforest (Portuguese: Floresta Amazônica or Amazônia; Spanish: Selva Amazónica, Amazonía or usually Amazonia; French: Forêt amazonienne; Dutch: Amazoneregenwoud), also known in English as Amazonia or the Amazon Jungle, is a moist broadleaf forest that covers most of the Amazon basin of South America."
        }
    }

    result = predictor.predict(data)

- Create a SageMaker endpoint using a custom inference script

The Hugging Face Inference Toolkit allows you to override the default methods of HuggingFaceHandlerService by specifying a custom inference.py with model_fn and, optionally, input_fn, predict_fn, output_fn, or transform_fn. To do so, create a folder named code/ with an inference.py file in it. For example:

    model.tar.gz/
    |- pytorch_model.bin
    |- ....
    |- code/
      |- inference.py
      |- requirements.txt

In this example, pytorch_model.bin is the model file saved from training, inference.py is the custom inference module, and requirements.txt is a requirements file for additional dependencies. The custom module can override the model_fn, input_fn, predict_fn, output_fn, or transform_fn methods. For more information, see the GitHub repo.
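To make the hooks concrete, here is a minimal sketch of what code/inference.py could look like. The four function signatures follow the hooks as documented for the Hugging Face Inference Toolkit, to the best of my understanding, but the model itself is a stand-in: `model_fn` returns a trivial Python function instead of loading a Transformers model from `model_dir`, so the file runs without any dependencies. Treat the stub model and its response shape as assumptions for illustration.

```python
# code/inference.py: illustrative sketch with a stub model (not a real Transformers model).
import json

def model_fn(model_dir):
    """Load the model from model_dir. Here: a stub that counts input words."""
    # A real script would load the saved model and tokenizer from model_dir instead.
    def stub_model(text):
        return {"label": "WORD_COUNT", "score": float(len(text.split()))}
    return stub_model

def input_fn(input_data, content_type):
    """Deserialize the request body into a Python object."""
    if content_type != "application/json":
        raise ValueError(f"Unsupported content type: {content_type}")
    return json.loads(input_data)

def predict_fn(data, model):
    """Run the model on the deserialized request."""
    return model(data["inputs"])

def output_fn(prediction, accept):
    """Serialize the prediction for the response."""
    if accept != "application/json":
        raise ValueError(f"Unsupported accept type: {accept}")
    return json.dumps(prediction)

# Local smoke test that chains the hooks in the order a request flows through them.
if __name__ == "__main__":
    model = model_fn("unused-by-the-stub")
    request_body = json.dumps({"inputs": "hello from SageMaker"})
    data = input_fn(request_body, "application/json")
    response = output_fn(predict_fn(data, model), "application/json")
    print(response)
```

Keeping each hook small makes it easy to test the script locally like this before packaging it into the archive. If a single function is more convenient, transform_fn can replace the input_fn, predict_fn, and output_fn trio.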

- Clean up

Make sure you delete the SageMaker endpoints to avoid unnecessary costs:

predictor.delete_endpoint()
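If the endpoint is only needed for a short experiment, one defensive pattern is to wrap its use in a context manager so that `delete_endpoint()` runs even when a prediction raises. The sketch below is an illustration under stated assumptions: `temporary_endpoint` is a helper introduced here, and `FakePredictor` is a stand-in so the example runs without AWS credentials. With the real SDK you would pass the `predictor` returned by `deploy`.

```python
from contextlib import contextmanager

@contextmanager
def temporary_endpoint(predictor):
    """Yield the predictor and always delete its endpoint afterwards."""
    try:
        yield predictor
    finally:
        predictor.delete_endpoint()

class FakePredictor:
    """Stand-in for a SageMaker Predictor; records whether cleanup happened."""

    def __init__(self):
        self.deleted = False

    def predict(self, data):
        raise RuntimeError("simulated inference failure")

    def delete_endpoint(self):
        self.deleted = True

fake = FakePredictor()
try:
    with temporary_endpoint(fake) as p:
        p.predict({"inputs": "hello"})
except RuntimeError:
    pass  # the failure is expected; the point is that cleanup still ran

print(fake.deleted)
```

The `finally` clause is what guarantees the cleanup call, so the endpoint does not keep running after a failed experiment.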

Author: Sriram C